Griffin Pitt, right, works with two other student researchers to test the conductivity, total dissolved solids, salinity, and temperature of water below a sand dam in Kenya.

(Image: Courtesy of Griffin Pitt)

5 min. read

As members of the inaugural AI x Science Postdoctoral Fellowship, Brynn Sherman and Kieran Murphy are already reaping the rewards of cross discipline collaboration—testing new ideas quickly and learning new research languages. The new fellowship program, offered through the School of Arts & Sciences (SAS) and the School of Engineering and Applied Science (SEAS), provides mentorship and peer engagement opportunities.

“AI is a part of doing research in the sciences now,” says Colin Twomey, the executive director of the School of Arts & Sciences’ Data Driven Discovery Initiative (DDDI) who oversees the new program. “There’s this growing recognition that there are experts who have scientific research goals here at Penn that want to use these AI tools, and there are folks with expertise in building them, so we thought, ‘Why not get them together, get them communicating, and eventually, collaborating?’”

As a cognitive neuroscientist, Sherman studies how the human brain forms and recalls memories, and she’s long been fascinated by how our experiences get encoded and organized. Through the AI fellowship, she teamed up with linguist and DDDI fellow Sarah Lee to tackle a novel research question: How does the narrative structure of events—like the plot of a TV show—shape what we remember?

Sherman co-authored a study with Lee of the Department of Linguistics that tested viewers’ memory of popular series such as “Game of Thrones,” “Stranger Things,” and “The Last of Us,” probing how well people recall the ordering of events.

“We were trying to understand how people’s memories of experiences over months or years are shaped by the structure of events,” she explains. In the study, participants were given brief text prompts reminding them of scenes and storylines, then asked about what happened when. By analyzing what people got right or wrong, the team could see how a season’s narrative arc helped (or hindered) their memory of specific moments.

Crucially, this project was a team effort that spanned disciplines. “Sarah has an interest in event representation from the angle of linguistics, whereas I had an interest in events from the perspective of perception and memory,” Sherman says of Lee.

Lee’s expertise helped ensure that the textual prompts were carefully controlled from a language standpoint—for instance, consistent phrasing was used to describe events so as not to bias memory retrieval. Meanwhile, Sherman—with expertise in how event structure and prior knowledge influence the encoding of new episodes—brought a cognitive science lens. The study was also in collaboration with former MindCORE postdoctoral fellow Sami Yousif, as well as Anna Papafragou, professor of linguistics.

That common space was enabled by the fellowship program itself, which threw researchers from linguistics, psychology, and computer science into the same meeting spaces. The result: a richer approach to a deep scientific question about memory.

For Murphy, his research employs information theory to demystify AI’s notorious “black box” problem—making deep learning systems more transparent, interpretable, and reliable.

“I try to make models more interpretable by tracing the flow of information—tracking where each piece of data originates within the dataset,” Murphy explains. His work examines how information flows through neural networks, identifying which parts of input data most influence an AI system’s final conclusions pertaining to a task it’s instructed to execute.

One of his favorite tools is something quite familiar: lossy compression, which cleverly discards extraneous data from JPEG images or MP3 audio. “I use lossy compression not for reducing file sizes, but for pointing out what the important information is in data,” Murphy says. In other words, by strategically “compressing” a dataset—be it sensor readings from hospital patients or behavioral data from experiments—his algorithms reveal which variables or features carry the real signal amidst the noise.

For example, Murphy has applied this approach to medical records. Imagine a patient who arrives with results from 50 diagnostic tests. His approach can sift through those results to identify which tests contain the information most useful for predicting the patient’s condition. It serves as a direct tool for scientists drowning in data, helping them to spot key patterns that merit attention—like highlighting a needle of insight in a haystack of numbers. It also offers a glimpse into how AI itself operates.

“What’s powerful is you can use this both to learn about the world, and then you can also use it to learn about the models,” he explains. After using compression to expose which factors matter in, say, a biological dataset, Murphy can “turn it around” and apply the same idea to the inner guts of a neural network.

This theoretical approach has found practical applications through collaborations fostered by the fellowship. Murphy worked with DDDI fellow Sam Dillavou, a physicist who is generating experimental data, to explore how information theory tools might extract meaningful patterns from complex datasets.

In their paper, Murphy and Dillavou introduced new metrics in information theory to rigorously compare the internal representations of different AI models. Their method provides quantitative ways to analyze how meaningful information is “actually captured” within neural networks. They demonstrated that models consistently used the same “words” to describe data, laying critical groundwork for more interpretable, trustworthy AI systems—ones that clearly show how and why they arrive at their conclusions.

In doing so, Murphy is asking questions that border on philosophical. “What does it mean for a machine learning model to ‘understand’ something? How can we distill knowledge to its essence, whether in a human brain or an artificial one? These are the kinds of big-picture reflections that flourish in such an academic setting,” he says.

Looking ahead, Bhuvnesh Jain, who co-directs the SAS DDDI; Penn Integrates Knowledge University Professor René Vidal, who directs the SEAS Innovation in Data Engineering and Science Initiative; and Twomey anticipate that Penn’s commitment to integrating AI into science will continue expanding significantly. Amy Gutmann Hall, Penn’s new state-of-the-art building, will soon serve as a central hub for data science and AI, intentionally designed to foster cross-disciplinary interactions.

Fellows will also soon have access to the Penn Advanced Research Computing Center (PARCC), featuring cutting-edge computational hardware such as high-powered GPUs capable of managing massive data streams and complex simulations. This increased computational power will greatly accelerate collaborative research projects.

What’s more, the AI x Science program is actively looking to broaden its horizons by recruiting researchers from an even wider range of disciplines. Notably, in partnership with Marylyn Ritchie, vice dean for AI at Penn Medicine, and Li Shen, professor of informatics in biostatistics and epidemiology, this year’s cohort expanded to include postdoctoral researchers from Penn Medicine, who are supported by the Institute for Biomedical Informatics. Wharton AI fellows are expected to join in the fall. Eric Bradlow, vice dean of AI and analytics at Wharton, has been an enthusiastic partner in enabling this next step.

Griffin Pitt, right, works with two other student researchers to test the conductivity, total dissolved solids, salinity, and temperature of water below a sand dam in Kenya.

(Image: Courtesy of Griffin Pitt)

Image: Andriy Onufriyenko via Getty Images

nocred

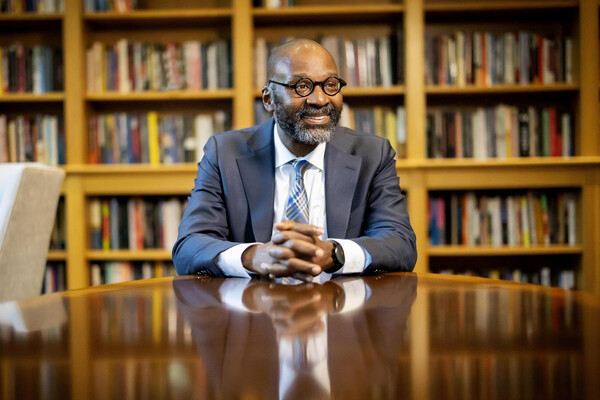

Provost John L. Jackson Jr.

nocred