
The year 2020 will go down in history as one drastically shaped by a virus that, as of late October, had infected more than 40 million people worldwide. Apt assessments have compared what’s happening now to the devastations of the 1918 flu pandemic. But what’s different today is how technology has allowed us to see, almost in real time, where the virus is spreading, how it’s mutating, and what effect it’s having on economies across the world.

This detailed view of COVID-19 is made possible thanks, in part, to a new generation of huge datasets—hundreds of genomes, millions of tweets—along with advances in computing power and the analytical methods to study them. Of course, massive datasets play different roles depending on the field using them. To provide some context, Penn Today spoke to experts across the University about how they and others are employing data to identify patterns and find solutions to the many challenges raised by the ongoing pandemic.

Seemingly subtle differences in individual-level human behavior (whether you stay in your house, leave and go for a walk, get on a train, or go to work) have profound consequences for how COVID-19 spreads. If you want to model that, it’s helpful to have granular, individual-level movement data. We’re working on doing that exact thing: taking standard epidemiological models and inferring very detailed networks, breaking down the city of Philadelphia into small chunks, then estimating contact rates between groups based on individuals visiting individual locations. Then we can run these models forward and test how different control strategies would change future caseloads.
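To make the idea of contact rates between groups concrete, here is a minimal sketch of a group-structured SIR model in Python. It is not the researchers' model: the two groups, the contact matrix, the population sizes, and the `beta` and `gamma` values are all made-up assumptions, and the `scale` parameter stands in for a control strategy that uniformly reduces contacts.

```python
def simulate(contact, pop, infected0, beta=0.05, gamma=0.1, days=120, scale=1.0):
    """Discrete-time SIR over groups; returns cumulative infections.

    contact[a][b] is the assumed number of daily contacts a person in
    group a has with people in group b. scale < 1 mimics a policy that
    cuts all contacts by the same fraction (an illustrative assumption).
    """
    n = len(pop)
    s = [pop[a] - infected0[a] for a in range(n)]  # susceptible
    i = list(infected0)                            # infectious
    total_cases = float(sum(infected0))
    for _ in range(days):
        # Per-person daily infection probability in each group,
        # driven by the infectious fraction of every group it contacts.
        force = [
            beta * scale * sum(contact[a][b] * i[b] / pop[b] for b in range(n))
            for a in range(n)
        ]
        new_inf = [min(s[a], s[a] * force[a]) for a in range(n)]
        new_rec = [gamma * i[a] for a in range(n)]
        for a in range(n):
            s[a] -= new_inf[a]
            i[a] += new_inf[a] - new_rec[a]
        total_cases += sum(new_inf)
    return total_cases


# Hypothetical inputs: two neighborhoods with more contact within than between.
CONTACT = [[8.0, 2.0], [2.0, 6.0]]
POP = [50_000.0, 30_000.0]
SEED = [10.0, 0.0]

baseline = simulate(CONTACT, POP, SEED)
half_contacts = simulate(CONTACT, POP, SEED, scale=0.5)
print(f"cumulative cases, no intervention: {baseline:,.0f}")
print(f"cumulative cases, contacts halved: {half_contacts:,.0f}")
```

Running the model forward under different values of `scale`, or with individual entries of the contact matrix changed, is one simple way to compare control strategies. A real analysis would estimate the matrix from movement data rather than assume it.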

This type of epidemiological modeling is a dramatic leap forward. Before, researchers would create these complex, agent-based models, but they would have to use indirect data like airline traffic or school attendance. Now, you have data on real people moving around. You can see what they’re actually doing and how their behavior changes when lockdown policies go into place. It’s drastically improving our ability to model the spread of disease and could have profound consequences for future pandemic response.

What happened this year with COVID could’ve happened in 2003 with SARS and any given year in between. The threat of novel viruses jumping from animals to humans is something we’ve been worrying about for decades. It turns out, we were really right to be worried and maybe we weren’t worried enough. It’s really important to do the best possible job we can of using models to make predictions and then using those to design optimal policies.

I’m an economist by training and I think about data all the time. In my area of content expertise, student absenteeism and truancy, I’m thinking about how we measure if kids are coming to school, and whether that is a good measurement. I had a book that came out in 2019 [“Absent from School: Understanding and Addressing Student Absenteeism”] on these two questions: How do we know if we’re measuring attendance correctly and how do we know if we’re seeing effects on student performance?

The pandemic has brought up a long list of measurement issues and effects issues. How do we know if kids are coming to school if it’s online? What counts as attending? What happens if the kid is there with the screen on but he or she has to take care of younger siblings who are in the house? What it means to come to school is all of a sudden really different.

An amazing big data project would be to use the sign-in data and, even more so, the movement data (when students are turning their screens off, for instance) to see how they’re responding to online instruction. There’s going to be a huge COVID boom in research, but I think the conclusions from the most impactful research will actually say more about the conditions in our education system pre-COVID. That’s what I’m excited to see.

Data is a huge part of elections and campaigning. More recently, starting in the 2004 election, candidates in both presidential and local elections started putting together teams of data people (that was before they were called “data scientists”) because they recognized the role data could play in running a campaign. Campaigns combine national voter registration databases with consumer-level data to figure out a voter’s political leanings, then target voters who are persuadable as well as voters who like a candidate but are on the fence about voting.

Now, because of the pandemic, the process of reaching out to voters is different. Political rallies, for example, require you to register as a mechanism for campaigns to get lists of people who like a candidate enough to come to a rally. One anecdote from this year is Trump’s Tulsa rally, which had underwhelming numbers because some people registered with no intention of going, which contaminated the campaign’s database.

In terms of the pandemic’s impact on the future, one question will be whether individuals will keep voting by mail. Levels may come down from what we see this fall but still settle higher than before the pandemic, which could affect how campaigns target voters in the future.

Data science is a powerful tool that can help answer difficult questions about society. It provides impartial methods for drawing conclusions about crime, poverty, and a host of pressing social issues. But as with any powerful tool, it involves risks, partially because a fallible human being is always doing the analysis.

As a statistician, I believe data science should happen transparently. In statistics, we go from a sample of observed data to an inference about what’s happening generally to predictions from those data. But what assumptions did the researcher make to draw those conclusions and how realistic are the assumptions? How certain can we be about the conclusions? What are the data’s biases?

For example, we can’t observe everyone who has contracted COVID-19. We only know about patients who have been tested and who have positive results. Even within those, we don’t know which are false positives. We might not even know how common false positives are if test providers aren’t transparent about error rates. So, we know the dataset will have biases, and we have to work with that. The key is transparency and providing a clear measure of uncertainty in the results. Whether policymakers use data science to inform their decisions is a different question.
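The point about false positives can be illustrated with Bayes' theorem. The sketch below computes the share of positive results that are true infections from a test's sensitivity and specificity and the prevalence in the tested population. All the numbers are assumptions chosen for illustration, not measured error rates for any COVID-19 test.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Probability that a positive test result reflects a true infection."""
    true_pos = prevalence * sensitivity
    false_pos = (1.0 - prevalence) * (1.0 - specificity)
    return true_pos / (true_pos + false_pos)


# Illustrative values only: the same hypothetical test applied to
# populations with different underlying prevalence.
for prevalence in (0.02, 0.20):
    ppv = positive_predictive_value(prevalence, sensitivity=0.90, specificity=0.98)
    print(f"prevalence {prevalence:.0%}: share of positives that are real = {ppv:.1%}")
```

Because the answer depends so strongly on specificity and prevalence, a count of positive tests is hard to interpret when those error rates are not published. Reporting results across a plausible range of error rates is one way to make the uncertainty explicit.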

In terms of crime, questions of causality are interesting: Have lockdowns increased shootings during the past few months? Or did crime rise because of increased unemployment, warm temperatures, a combination of these, or something else? With the pandemic, it’s hard to answer these questions because so much changed at once. Answering them properly will require clever research designs, longer-term data collection, and careful data analysis. It will likely be some time before we have a true sense of why certain social changes happened during the pandemic.

COVID has led to many changes that present an opportunity for natural experiments to evaluate the built environment of a city. For example, remote working is reducing car usage and freeing up urban space, such as restaurants using parking lanes for outdoor terraces. These changes give us the opportunity to see if, for example, outdoor terraces lead to more vibrancy.

COVID has also increased the prominence of the technologies that are used for getting high-resolution data on cities. Studies of social distancing using cell phone data have made people more cognizant of movement tracking, and how these tracking metrics can be used to study the use of public spaces in general.

In addition, the Black Lives Matter movement has increased awareness of the inherent biases contained in quantitative approaches to crime, such as predictive policing. There is a wider understanding that algorithms trained on biased data can disadvantage certain subpopulations. In the future, I hope there will be an increased call for thinking about the discriminatory biases that can result from using big data to make cities more efficient. Viewing justice as an equally desirable paradigm to efficiency is something that I’m hopeful comes out of the trauma that has been 2020.

Within Penn Medicine I’ve been part of a team led by Chief Research Information Officer Danielle Mowery and our Information Services that is putting together a COVID-19-specific data warehouse, called I2B2, used by institutions around the world. Let’s say an investigator at Penn wants to do a query to find out how many COVID patients over the age of 60 on a certain medication there are. They can do that and find out which institutions have those patients and then collaborate with them. It’s what we call a federated model, so patient data stays at each site, which helps with data privacy and security.
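The federated idea can be sketched in a few lines of Python. This is a toy illustration, not the warehouse's actual interface: the `Patient`, `Site`, and `federated_count` names, the site names, and the medication label `drug_x` are all hypothetical. The property it demonstrates is that each site answers a query with an aggregate count and never hands over patient records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    age: int
    covid_positive: bool
    medications: frozenset


class Site:
    """One institution; its patient records stay inside this object."""

    def __init__(self, name, patients):
        self.name = name
        self._patients = list(patients)

    def count(self, predicate):
        # Only the aggregate count is returned to the caller.
        return sum(1 for p in self._patients if predicate(p))


def federated_count(sites, predicate):
    """Ask every site the same question and collect per-site counts."""
    return {site.name: site.count(predicate) for site in sites}


def over_60_on_drug_x(p):
    return p.covid_positive and p.age > 60 and "drug_x" in p.medications


# Made-up records for two hypothetical sites.
sites = [
    Site("site_a", [Patient(72, True, frozenset({"drug_x"})),
                    Patient(45, True, frozenset({"drug_x"}))]),
    Site("site_b", [Patient(66, True, frozenset({"drug_x"})),
                    Patient(81, True, frozenset()),
                    Patient(63, False, frozenset({"drug_x"}))]),
]
print(federated_count(sites, over_60_on_drug_x))
```

An investigator sees which sites have matching patients and can then contact those institutions directly. A production system would send a structured query to each site instead of a Python function, and would add access controls that this sketch omits.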

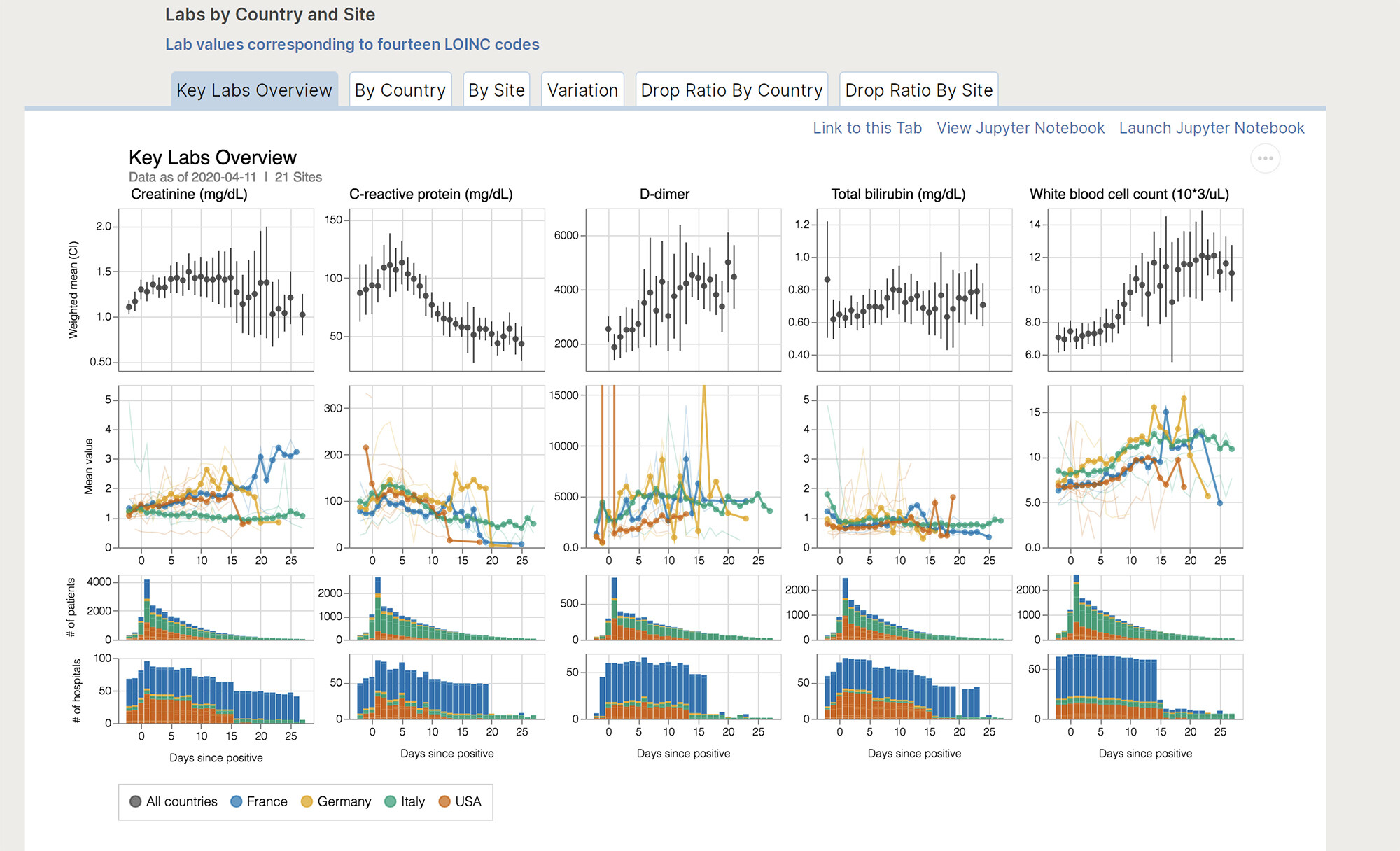

We also participate in an international COVID consortium, 4CE, that has data standardized so we’re all talking about the same thing. There’s a common set of analytic tools so if someone in France sees something interesting in their data, they can run their analysis on our Penn data to see if that pattern holds here. We’ve already published a research paper using that platform and have five or six more in the works.

And personally, I’m very interested in the heterogeneity of the disease: why there is so much diversity in symptoms and health outcomes. We’re actively developing machine learning and artificial intelligence methods to better understand the patterns at play.

I think by January we’re going to be in a really strong position to settle into a structured, rigorous scientific approach to COVID. This disease isn’t going away, and we’re going to be able to use these data resources to ask the right questions and get the right data and collaborators to find some helpful answers.

Our work takes whole genomes, finds variants between them, and uses computational statistical approaches to determine if there are signatures of natural selection acting on them. That’s what we did in a recent study, looking at four genes that play a role in the entry of the SARS-CoV-2 virus into cells. Our findings uncovered some variants that have been under natural selection in the past.

We also incorporated data science, working with Anurag Verma, to use electronic medical record data from patient samples from the Penn Biobank. We found some interesting correlations between what we found genetically and a patient’s clinical course of COVID. So that gives us a little bit of a clue about the functions of these variants.

A bigger project we’re in the early stages of pursuing will involve working with the African immigrant and African American communities in West Philadelphia. We’re hoping to understand the prevalence of COVID-19 in those communities and to look for correlations between the risk of severe disease and individual risk factors: social, genetic, and geographic. We’ve been reaching out to community organizations and local leaders, and we’re including a whole host of experts from Penn—bioethicists, social scientists, epidemiologists, and more. We want to make sure that the community members are true partners in this effort and that we have a way to get the information back to them. It’s going to be a huge undertaking but it’s so important to understand the risk factors for disparities in prevalence and severity of COVID-19, and to use them to try to improve public health.

This is one of the first times we’ve seen this close a feedback loop between data science and public policy, with reopening plans and policies all being informed in real time by data. Data is also being used to develop therapies and vaccines; now, during the approval process, there’s going to be much more public scrutiny of the data, so the role of a statistician in helping the public interpret results is crucial.

There’s also a huge amount of personal data we’re releasing because we want to fight COVID, and we have opened the door to allowing our data to be surveilled in ways we never would have allowed in the past. Data ethics is something that has always been important, and that people have been studying, but it’s going to become even more important to make sure we’re using this personal data for the right purposes.

Penn has always had close connections between Engineering and other schools, but I think that the current crisis presents new opportunities for increased engagement and collaboration. The education of students in data science is also imperative. Data science courses are cropping up all over campus. This fall’s Big Data Analytics course, for example, has 400 students from 50 different majors, which shows that there’s huge interest in data science from many different perspectives.

The keys for data science to succeed are interdisciplinarity and the desire to work together, and Penn is a great place for doing that.


Katherine Unger Baillie, Michele W. Berger, Erica K. Brockmeier
